2021 in Review

tl;dr I learned more, emotions are funny, and COVID still kicks.

December 24, 2021

My friend Tim, like Dan Wang and others, writes an annual letter. I decided that I should do the same, since I'm just a high-strung munchkin who never looks backwards.

What a whirlwind it has been these past 12 months.

2021 was a year of new beginnings and reincarnated challenges. It was my first full calendar year outside of Korean military service, and the first time since 2018 that I set foot on American soil. It was a year of seeing old faces (in person!), mending worn relationships, and igniting new friendships. [1]

COVID has continued to ravage the world since it first struck in the winter of 2019, and the emotional rollercoaster doesn't seem to be ending anytime soon. During the summer, we rejoiced and placed our faith in vaccines to stop COVID dead in its tracks - but the colder seasons smothered that faith as variants such as Delta and Omicron continued to emerge. Still, like the young David to Goliath, we tell COVID: "the Lord will deliver you into my hand, and I will strike you down." [2]

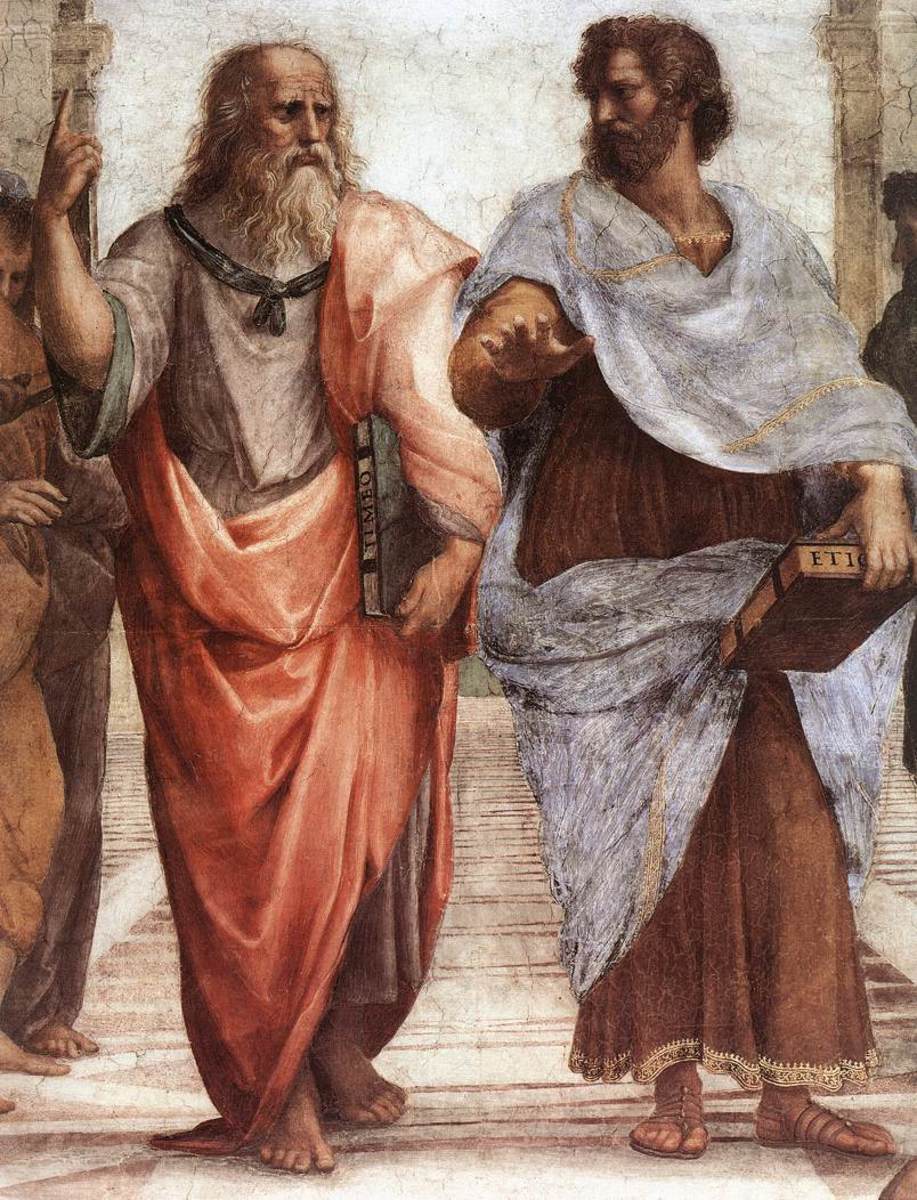

Having been a math and philosophy person my entire life, I resolved this year to focus less on the abstract and more on the concrete. [3] I read the news more often and explored fields unfamiliar to me: economics, anthropology, sociology, business, finance, history, etc. I'm pleased to report that my efforts are not going to waste - I can now examine ideas from more diverse perspectives and engage in conversations where I previously wouldn't have known what to think. There's still so, so much to learn, but I'm excited to see how my thought process changes throughout the rest... of my lifetime.

Paradoxes continue to be the framework on which my thought processes are based. I live for the absolute euphoria that comes from making cross-disciplinary connections via paradoxical thinking.

I remain scattered in my interests. This year, I contemplated taking up visual art, architecture, stand-up comedy, and a smattering of others. Despite my disorganized half-ambitions, I am still guided by the same essential questions:

It embarrasses me to admit it, but one of the most memorable moments of the year was seeing my grandmother smile from cheek to cheek. I unfortunately have a bad habit of not looking elders in the eye, but that one twinkle of her smile cured me. I was surprised by how similar her smile was to mine - the same disappearing of the eyes [4], the same curvature of the lips, etc. I've always felt a little afraid (?) around my grandparents, but her smile reminded me that I need to make a better effort to understand where I come from. They were once my age, anyway.

I'm thankful to COVID for bringing my family some much-needed time together. This past year was the longest stretch I've spent at home since I left in ninth grade, so it was no surprise that it took some time to adjust. I'd changed a lot as a person from 2013 to 2021, while the lenses through which my family viewed me, and through which I viewed them, had been frozen in time. Regardless, family is family, so the transition happened with relatively few bumps along the way.

Ever since I read James C. Scott's Seeing Like A State, legibility has been on my mind.

There's no question that there exists a contradiction between legibility/standardization and diversity. Both have their pros and cons. In fact, diversity excels where legibility fails, and vice versa.

Legibility is useful in convenience-first contexts, since it eliminates the need to convert between different forms. However, standardized forms are one-size-fits-all by definition, which means that there exists a limit to how effectively they can address a problem domain.

This is where diversity excels. Specialized tools, when solving the problems they were built for, will always outperform general-purpose tools. Nonetheless, diversity necessitates that extra conversion step.

When power comes into the picture, things get more complicated. In particular, installing a standardized system requires a force that's able to impose its strength over all other forces, i.e., a centralizing force. Diversity doesn't call for such a power.

Suddenly, standardization feels dangerous. Having to be subservient to a centralizing power in exchange for the comfort of not having to convert between different forms? Most people would say no.

But wait! This tension has reared its head over and over again throughout history, so we shouldn't be so quick to judge. Consider the following examples/scenarios:

This isn't to say that centralization is a silver bullet for all problems. The answer is, of course, context-dependent. All I'm trying to demonstrate is that centralization isn't always a bad thing. The canonical Spider-Man aphorism - "with great power comes great responsibility" - rings true. [6] Having your own power is great, until the maintenance fees come due.

The naturalistic fallacy is the assumption that what is natural is good. Philosophers often describe it as "deriving an ought from an is".

I've been wondering why the naturalistic fallacy exists, and I think it has something to do with our current understanding of biology.

Like math, biology occupies a weird place among the sciences: it seems to be the only science that can't be explained mechanistically. In chemistry, we describe reactions as a function of molecular properties, not as a function of what the reaction is supposed to achieve. Likewise, in physics we say "electrons are attracted to protons" instead of "electrons are attracted to protons so that something else may occur". [7]

But in biology, we're taught that a bird's beak is shaped so that it can crack open nuts better. We're taught that chameleons change color so that they can hide better. See the difference?

We understand biology as a teleological science. But is there really any other way to understand it? Without teleology, how can you explain nature's extraordinary regularity or its magnificent diversity?

You'll undoubtedly shove the central dogma of biology in my face. But DNA, RNA, and proteins alone can't explain seemingly perfect adaptations - opposable thumbs, camouflage, a woodpecker's skull, etc. In the current theory, the central dogma is akin to a computer following instructions. But where did these instructions come from, and why are they written the way they are?

Again, you'll object by gesturing towards natural selection. [8] Although powerful, natural selection actually relies on teleology. We say that an adaptation formed because it was advantageous to its possessors. But our definition of "advantageous" depends on our current understanding of the adaptation. In essence, we're retro-projecting - we infer a past event based on the present state.

Biology can't seem to escape from teleology; the world seems to have been designed by an omniscient designer who uses natural selection as his primary tool.

The naturalistic fallacy is so potent because we understand biology as teleological. An all-knowing master designer, creating life that is so perfectly well-adapted... how could this master designer be evil? Surely what this designer has built must be more "good" than any of our creations?

This means that the naturalistic fallacy has everything to do with religion, but in a different form. Post-Kuhn, we often call science a religion, and biology is the canonical science-religion because it is teleological.

In Seeing Like A State, Scott claims that a state can only see legible things. Therefore, it must spend most of its early days trying to make illegible things legible.

I originally thought that AI's main strength was in legible-izing any illegible construct. With enough data, we can train a model to detect patterns or recognize a form. AI is an adaptable tool that can better preserve "local" knowledge while still extracting useful information, i.e., legible-ize local information.

While this may remain a core strength, I remembered the fundamental question of any technology: does it free up time and cognitive resources? There's a reason every technological boost appears to have socioeconomic implications.

Invented during the first Industrial Revolution, the power loom saved both time and cognitive resources. Time - because the loom was much faster than human weavers. Cognitive resources - because mechanized weaving reduced the demand for skilled weavers, and child employment in power loom mills rose. [10]

Another canonical example of technology: computing devices, e.g., the abacus, the logarithmic slide rule, mechanical calculators, electronic calculators, and computers. Each saves time and reduces cognitive strain.

All of this is to say: machine learning's main strength is in freeing up time and cognitive resources, so that people can better focus on the issues that matter. Sure, deep learning techniques continue to produce stunning results by the day. But any startup that claims its artificial intelligence is "the future" is missing the point. It's the future in the sense that that's how mundane tasks will be completed - but it's not how future solutions to future problems will look.

Let me provide an example. The chief pitfall of machine learning is that you need a lot of data: the more data you have, the better your model tends to be. [11] However, "ground-truth" data will always be imbalanced - your model will predict everyday, mundane events far better than black swan events because your data is inherently skewed. There are techniques for dealing with imbalanced data, but let's face it: clever techniques are for the resource-poor, and they will never match the results of simply having more data.
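To see why skewed data flatters a model, here is a toy Python sketch. The dataset (990 "mundane" days and 10 "black swan" days) and the helper names are hypothetical, made up for illustration. A model that always predicts the common case scores 99% accuracy while catching none of the rare events. The last helper shows one common mitigation, inverse-frequency class weights (the same n_samples / (n_classes * count) formula scikit-learn uses for `class_weight="balanced"`); it is one technique among several, not a cure.

```python
from collections import Counter


def accuracy(y_true, y_pred):
    """Fraction of predictions that match the true labels."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def recall(y_true, y_pred, positive):
    """Fraction of true `positive` examples that the model actually caught."""
    hits = [p == positive for t, p in zip(y_true, y_pred) if t == positive]
    return sum(hits) / len(hits) if hits else 0.0


def inverse_frequency_weights(labels):
    """Weight each class by n_samples / (n_classes * class_count)."""
    counts = Counter(labels)
    n, k = len(labels), len(counts)
    return {label: n / (k * c) for label, c in counts.items()}


# Hypothetical, deliberately skewed data: rare events are 1% of examples.
labels = ["mundane"] * 990 + ["black_swan"] * 10

# A lazy "model" that always predicts the most common class.
majority = Counter(labels).most_common(1)[0][0]
predictions = [majority] * len(labels)

print(accuracy(labels, predictions))              # 0.99
print(recall(labels, predictions, "black_swan"))  # 0.0
print(inverse_frequency_weights(labels))
```

With these numbers, each black swan example gets a weight of 50.0 versus about 0.5 for a mundane one, so a weighted loss would treat missing one rare event roughly as seriously as missing a hundred ordinary ones.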

But that's okay. Leave the mundane tasks to the computers, and let the humans work on the more important problems. I don't know what future solutions to future problems will look like, but human contributions will definitely be present.

I don't usually form New Year's resolutions and I don't intend to start now. My listing these "goals" here is more for accountability than anything else.

Next year, I aim to spend more time in reflection. I'm very high-strung, and anyone who has lived with me will tell you that I'm constantly stressed. I flipped through my journal entries for the year and discovered that almost every single entry was about needing to be better!

That's not great. Something needs to change, clearly.

Storytime. In the military, every soldier goes through five weeks of basic training. Once training is complete, soldiers are allowed five hours with their families before being assigned to their bases. For me, the five weeks of training felt like an eternity, but the five hours of bliss disappeared in a *snap*. As I walked back to the camp, thinking of the hell ahead, my father told me, "You need to look forwards and backwards - forwards to motivate you, backwards to know you're going in the right direction."

This leads to a more general piece of advice for my future self: When regretful, look forwards. When anxious, look backwards. When rushed, look far. When overwhelmed, look close.

For most of my life, I've sought to understand all sides but was content to stop there, all the while forgetting to form an opinion myself. Common sense dictates that this isn't great in the long term.

In poker, it's usually better to fold or raise than to call. A random walk that is more likely to step one way than the other will, on average, end up much further from where it started than a simple (symmetric) random walk will.
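To make the random-walk claim concrete, here is a small Monte Carlo sketch in Python. The step count, the 0.6 bias, the seed, and the helper names are illustrative choices, not anything from the original. A walk that steps right with probability p drifts by about n(2p - 1) after n steps, which grows linearly in n, while a symmetric walk's typical distance from the origin grows only like sqrt(2n/π).

```python
import math
import random


def walk(steps, p_right, rng):
    """Final position of a 1-D walk that steps +1 with probability p_right, else -1."""
    position = 0
    for _ in range(steps):
        position += 1 if rng.random() < p_right else -1
    return position


def mean_distance(steps, p_right, trials=1000, seed=0):
    """Average |final position| over many independent walks (seeded for reproducibility)."""
    rng = random.Random(seed)
    return sum(abs(walk(steps, p_right, rng)) for _ in range(trials)) / trials


steps = 1000
simple = mean_distance(steps, 0.5)  # theory: about sqrt(2 * steps / pi), roughly 25
biased = mean_distance(steps, 0.6)  # theory: about steps * (2 * 0.6 - 1) = 200

print(f"simple walk: {simple:.1f} (theory ~ {math.sqrt(2 * steps / math.pi):.1f})")
print(f"biased walk: {biased:.1f} (theory ~ {steps * (2 * 0.6 - 1):.1f})")
```

Even a modest 60/40 lean takes the walk roughly eight times further than an even coin over a thousand steps, and the gap keeps widening as the number of steps grows.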

At the same time, though, it's critical to recognize that directionality isn't always a winning play. Sometimes, the optimal strategy is the opposite: go forth and diversify. But, for the time being, I need to form opinions and justify/update them.

In particular, my opinions must be verifiable! I usually gravitate towards more philosophical questions, and philosophical questions aren't usually verifiable. Hence, not only must I form actual opinions, I must form opinions on questions that are empirically or logically verifiable.

I look forward to the coming year. See you all soon.